Award-Winning Chicago Personal Injury Lawyer - Securing Justice for Illinois Injury Victims - Over $450 Million Recovered

Families across the country are pursuing Roblox sexual abuse lawsuits after learning that sexual predators used Roblox and connected apps like Discord to groom, exploit, and harm children.

At Rosenfeld Injury Lawyers, we are leading efforts to hold Roblox and other technology companies accountable when they fail to protect minors, and we are here to stand with families seeking answers, justice, and meaningful reform.

Children and teens deserve safe online spaces, especially on platforms designed and marketed for young users.

Yet, disturbing patterns of grooming, coercion, and sexual exploitation have emerged across Roblox, exposing major failures in content moderation, age verification, and child safety systems. Many victims and parents had no idea the danger existed until the unimaginable happened.

If your child was targeted, manipulated, or exploited on Roblox or Discord, our dedicated legal team can help. Our sexual abuse lawyers combine trauma-informed advocacy with deep experience in child sexual abuse litigation to guide families through every step of the legal process.

You can trust us to listen, to protect your child’s privacy, and to fight aggressively for accountability and compensation, so your family can begin to heal and move forward.

Discord is a free application and web platform for instant messaging, voice chat, and video communication. It was initially designed for gamers, but its use has expanded considerably, and it has become a popular social platform for teens and young adults. Users can communicate in groups or through private messages. They join or create “servers,” which are private or public online spaces focused on particular communities, video games, issues, or friend circles. Within servers, chat rooms called “channels” host text or voice conversations, while “direct messages” allow private, person-to-person contact.

Discord is available on phones, computers, and directly in a web browser. It allows for the sharing of text, images, audio, video, and live streams.

Because Discord allows virtually anyone to join servers or communicate through private/group chats, risks include:

Harassment, threats, or other harm that children suffer on Discord can become the subject of civil lawsuits brought on behalf of their families.

Roblox is an online platform and game creation system where users make, play, and share interactive 3D games (“experiences”). It is often described as a virtual universe.

Each Roblox player creates an avatar (a virtual character), explores games or worlds built by others, and can share their own 3D experiences. While users can play alone, most engagement occurs in multiplayer games, where chatting, building, trading, and indirect communication are regular activities.

Robux, a virtual currency purchased with real money, lets users (including minors) buy clothing and “skins” for their avatars or trade and sell custom-designed content.

Anyone can send or receive friend requests, join private or public games, and communicate via in-game chat or group messages. Parental controls are available, but they require careful management.

Roblox also has some serious online child safety risks that need to be considered, including:

Despite Roblox’s moderation system and content filters, which are intended to catch profanity, sexually explicit images, and other safety risks, the platform’s millions of daily users create extensive opportunities for strangers to interact with children unsupervised.

Roblox has seen documented instances of grooming and exploitation by adults preying on minors. Some predators use in-game virtual currency, gifts, or the perceived friendship of avatars to manipulate kids.

Despite these controls, adult or older users sometimes find creative ways around the filters to share sexual, violent, or hateful content. Roblox games can also hide sexual content, such as “condo games” and in-game “strip clubs,” which are specifically designed to evade moderation and simulate inappropriate or adult-themed scenarios. These environments have been widely documented in news reports and are one of the most common and alarming ways children can be exposed to grooming or disguised explicit roleplay.

Outside links, phishing scams, and lopsided trades can lead to children losing Robux or rare items, sometimes through threats or deception.

Roblox and Discord often go hand in hand for young gamers and online communities, even though they are separate platforms. Roblox provides the virtual world and games, while Discord serves as an external chat and voice hub where players can communicate in more depth, organize teams, share strategies, and connect socially outside of Roblox’s built-in chat options. Many Roblox groups have their own dedicated Discord “servers,” allowing users to communicate with each other.

Playing Roblox might seem like harmless fun, but the platform’s social features, user-generated games, and open chat systems can expose children to surprising risks. Understanding these dangers helps parents spot red flags early and take steps to keep their kids safe online.

Parents should understand that kids on Roblox can encounter chat and voice messages containing sexual, violent, or otherwise inappropriate language. Online predators often use coded language, obscure emoji combinations, and intentional misspellings to sidestep the platform’s filters and surveillance tools.

Predators looking to groom a child rarely move fast – instead, they create bonds by giving compliments, patiently chatting, gifting in-game currency like Robux, and regularly checking in. Slowly, they isolate a child from healthy online friendships and introduce the idea of “special secrets” or loyalty to only them.

As trust builds, abusers encourage secrecy, suggesting kids use different (alt) Roblox or Discord accounts, hide chats from adults, and look for spaces where parental oversight doesn’t reach. Transitioning the conversation to a less-regulated platform, such as Discord, is a common next step.

Abusers don’t usually go straight to threats or inappropriate requests. Instead, they slowly and subtly test the boundaries of the children they’re interacting with, through mildly inappropriate conversations or jokes, or suggestions to engage in role-playing. The goal is to gauge a child’s reactions and push further when it seems the child will go along with what is being suggested.

Once a relationship is established, they might start to request nude photos or videos from children.

Sextortion is a type of blackmail specific to sexual/private images, with perpetrators telling kids they’ll reveal images or sensitive chats to family and friends unless the victim sends more, performs live, or shares money/information.

Another ever-present danger is abusers who ask to meet children in person. This can lead to serious emotional and physical harm.

It can help to understand the specific scenarios on Roblox and similar platforms that expose children to harm.

Some of the most troubling are “condo” games, a nickname for private or hard-to-find Roblox worlds designed specifically for sexual or adult content. These games often appear under innocent names and may simulate apartments, NPC clubs, or social pods. Despite ongoing efforts to ban them, hosts simply re-create them after they are taken down, luring thousands of curious children into explicit virtual environments.

Certain user-created nightclub or “dance party” games blur the boundaries, featuring avatars flirting, pairing off, “dating” online, or engaging in role-play that normalizes adult behavior and relationships inappropriate for a young audience.

Some rooms on the platform are meant to recreate therapy or support group spaces. These tend to attract shy children who need support and are eager for approval. Adult predators pose as mentors and then sometimes steer discussions toward secrecy and inappropriate content, which can progress to highly personal and inappropriate questions and confessions.

Kids desperate for Robux (the platform’s paid currency) are vulnerable when traders or supposed “friends” suggest exchanging exclusive goods or rewards for dares, personal details, or inappropriate images.

Several warning signs may indicate that your child’s safety or well-being is at risk on Roblox or connected platforms, such as Discord. Keeping an eye out for these red flags can help you intervene before the situation escalates:

Identifying these signs early enables parents to address problems and, if necessary, explore potential legal remedies.

If you’re noticing any of the above signs and think your child has been harmed on Roblox or Discord, there are certain steps you should take right away. Consider the following:

Begin by having a gentle conversation with your child that prioritizes their emotional safety and reassurance. Avoid blaming them for anything – your goal is to make them feel supported so they feel comfortable sharing what happened.

Document everything by taking screenshots of chats, recording usernames, writing down dates, saving account information, and noting any suspicious content. This evidence is important whether you choose to report to authorities, to the platform, or explore legal avenues.

Act quickly to protect your child’s digital presence by changing passwords on Roblox and Discord, activating two-factor authentication where possible, reviewing all friend/follower lists, and securing or closing extra (alt) accounts. This helps prevent further contact between your child and an abuser.

Use the reporting tools in Roblox and Discord to flag inappropriate users or content, and don’t hesitate to file an official report with local police. Severe cases should also be reported to national authorities, such as the FBI or the National Center for Missing & Exploited Children’s CyberTipline, which handles reports of online child exploitation.

Harmful encounters online can lead to real trauma, even if your child never met the abuser in person. Reach out to an experienced mental health professional to ensure your child receives the care they need. If they did meet the abuser in person, make sure they get medical care if needed as well.

If your child experienced exploitation or significant online or physical harm, consult a knowledgeable attorney as soon as possible. Time limits, evidence, and strategy are critical for these cases. An experienced lawyer will walk you through the legal claims your family may have against the abuser, the online platforms, or both. Our team is always here to help you.

Major media investigations, research reports, and law enforcement agencies have documented severe and, quite frankly, terrifying gaps in Roblox’s ability or willingness to keep minors safe on its platform. Much of what we know came to light only when outside journalists or regulators applied pressure, not because the company acted on its own. Here’s what independent reviewers, analysts, and global authorities have found.

Bloomberg Businessweek launched a months-long investigation involving public records, interviews with victims’ families, former safety staff, and insiders at Roblox Corporation. Reporters documented a concerning pattern: many adults befriended, groomed, and eventually victimized children through Roblox messaging and games. Some of these encounters led to real-world abductions or abuse, and dozens of arrests.

With 78 million daily users – 40% under age 13 – Roblox is the world’s most popular playground for kids online. Its huge custom game system welcomes millions of creators, but that openness makes it easy for bad actors to hide among real players without much identity checking or protection.

A notorious developer known as “DoctorRofatnik” built a hit game and used Discord and Roblox to groom teens under the pretense of joking around. Early complaints had little effect. Even after Roblox belatedly banned him, he returned to the platform and was caught only when he was arrested for abducting a 15-year-old Texas teen he met there.

Investigators found more than 24 criminal cases since 2018 that began on Roblox and followed a common pattern: adults flirting and offering gifts in-game, luring kids to Discord or Snapchat, and often offering Robux in exchange for nude images, sometimes from children as young as 8.

This report points to significant safety problems:

Plaintiffs and enforcement agencies are likely to argue that Roblox had what lawyers call “constructive knowledge,” meaning the company should have known about these dangers and could have prevented the harm.

Hindenburg Research, a well-known investigative firm, focused its attention on Roblox Corporation to assess the truth behind the company’s financial performance, user engagement numbers, and its ability to keep children safe.

Roblox is massively popular with children worldwide and has advertised itself as a “safe and positive” digital playground. Hindenburg set out to test these claims, motivated by concerns reported by families, investors, and child safety advocates.

The central allegations in the Hindenburg report are that Roblox systematically inflates its growth and engagement statistics, and that the company has created and maintained an online product where exploitation and danger to children are not rare “accidents,” but preventable, ongoing failures. Here’s what the report found:

Roblox frequently promotes its “daily active user” (DAU) figures as evidence of rapid growth. The report alleges that these figures do not accurately reflect the actual number of unique users, because multiple accounts (“alts”) owned by a single user, as well as bots, are counted, artificially inflating the public numbers.

Roblox also claims kids consistently spend over two hours on the platform each day. Hindenburg’s investigation found that actual engagement per typical unique user was much lower, around 20 to 25 minutes, once bot traffic and duplicate accounts were filtered out.

Former employees said two sets of metrics exist: the figures used externally for public relations, which do not remove duplicate “alt” accounts, and more accurate numbers tracked internally. This further obscures the reality of user growth and fails to give families or stakeholders an accurate picture.

Investigative researchers posing as children found chronic risks in high-traffic Roblox games: hidden sexual dialogue, group invitations to stripped-down “condo” experiences, and accessible child pornography and fetish roleplay.

A large volume of violent and inappropriate user-generated content, including entire games, slipped past official moderation. Some of it included graphic abuse, simulated attacks, “strip clubs,” and grooming groups targeting kids, demonstrating persistent moderation breakdowns.

The platform’s parental controls fell short of expectations. Kids could easily change their age in settings, create new “alt” accounts, and bypass many default content protections. Most parents did not know how easy this was or how often it was happening.

The report describes the company’s decisions to slow or freeze hiring and spending on safety in order to focus on growth.

Despite the appearance of success, Roblox Corporation operates at a loss, contrasting with competitor gaming platforms that make sustainable profits.

The report called Roblox “an X-rated pedophile hellscape.” The findings illustrate the problems that arise when profits are prioritized over child safety.

Federal agencies and police around the world now see Roblox, and related platforms like Discord, as targets and tools for organized child predator groups, not just isolated threats. One of the most notorious is the “764 Network,” uncovered through joint investigations by major U.S. and international news outlets and law enforcement agencies.

The 764 Network is an organized group that has used mainstream apps, including Roblox, to contact children, gain their trust, and then groom, extort, blackmail, or even demand that kids harm themselves. Investigators started tracking this group after finding patterns where kids met new “friends” on public gaming platforms like Roblox.

Predators in the network would then guide children to more private and less-monitored chat apps, such as Discord or Telegram, where they could escalate their abuse.

Not only have U.S. authorities designated 764 as a major transnational threat, but agents in the FBI have confirmed that there are open criminal cases in every major FBI field office involving this network. So far, arrests have been made in multiple countries for child-sex-abuse-related crimes tied to the 764 Network.

Official complaints and indictments also show that Roblox and similar platforms serve as entry points: weak moderation and easy anonymity make them attractive tools for sophisticated predator organizations.

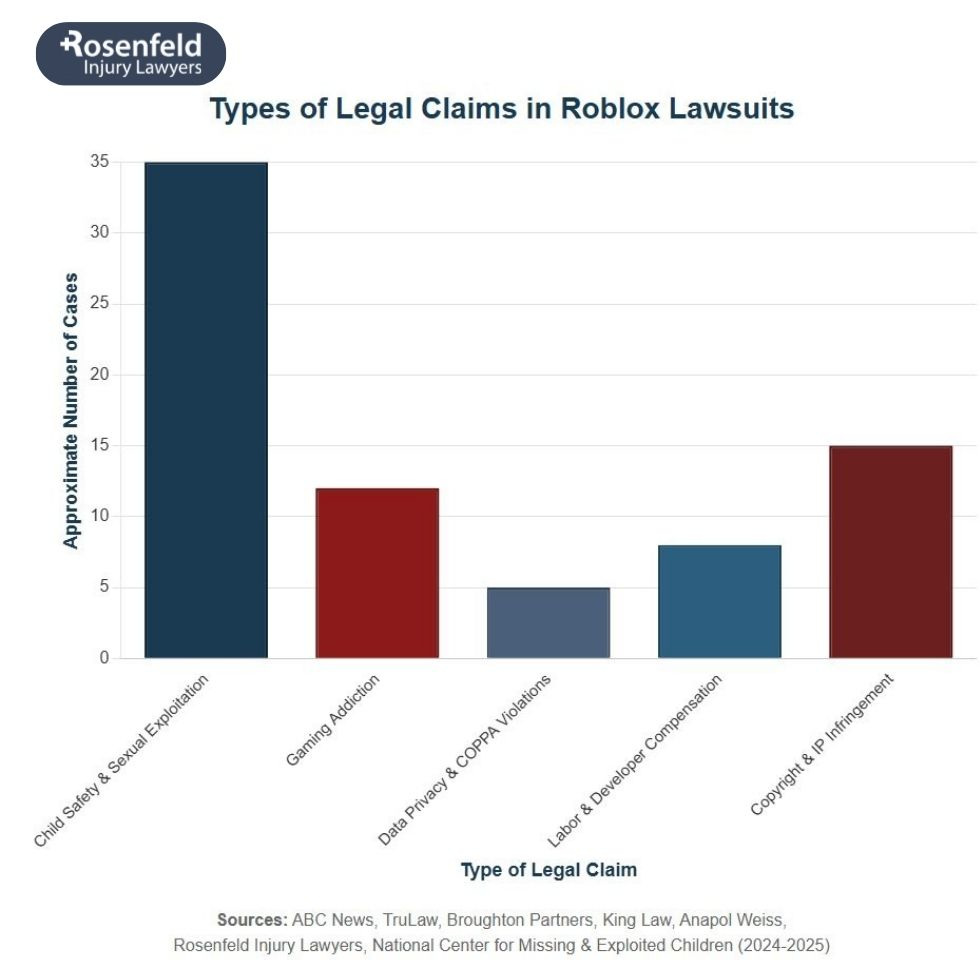

Across the country, a growing number of Roblox sexual abuse lawsuits are revealing the platform’s deep failures to protect young Roblox users from grooming and exploitation.

Families, state officials, and federal investigators have uncovered disturbing evidence that predators used the online gaming platform to contact, manipulate, and exploit minors, often through private chats and connected Discord channels.

The following timeline highlights some of the most significant legal actions, government investigations, and class action lawsuits filed against Roblox Corporation and Discord Inc. for their alleged role in enabling sexual abuse and distributing explicit content involving children.

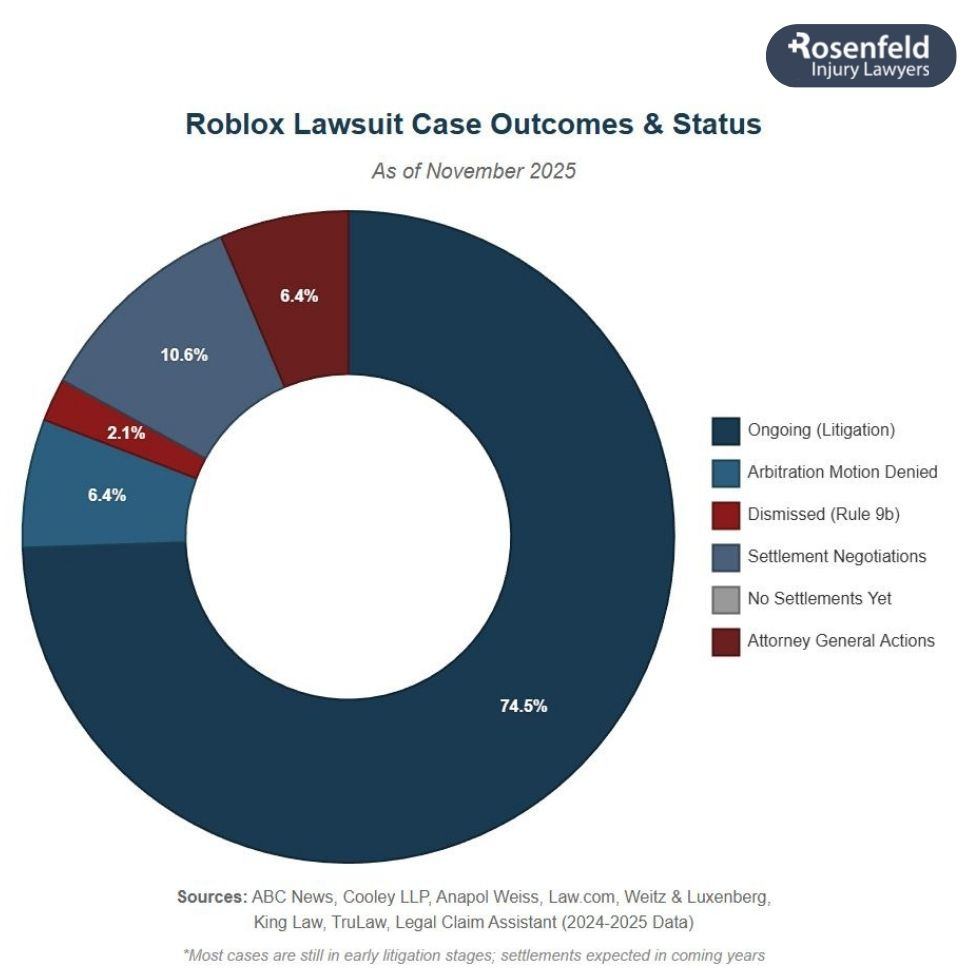

On December 12, 2025, the Judicial Panel on Multidistrict Litigation granted the request to consolidate all federal Roblox child-exploitation lawsuits. The Panel created MDL No. 3166: In re Roblox Child Exploitation Litigation and assigned it to Chief Judge Richard Seeborg in the Northern District of California. This means more than 80 cases are now centralized before one judge for coordinated discovery, motions, and evidence, streamlining the litigation and ensuring consistent rulings across all federal Roblox cases.

In September 2025, plaintiffs filed a motion asking the Judicial Panel on Multidistrict Litigation (JPML) to create a coordinated MDL for all federal Roblox child-exploitation cases. The request argued that dozens of lawsuits shared the same core allegations about Roblox’s design, safety failures, and predator access.

The MDL would allow families nationwide to coordinate discovery, share evidence, and pursue accountability together.

In August 2025, Louisiana Attorney General Liz Murrill filed a groundbreaking lawsuit accusing Roblox Corporation of enabling large-scale child grooming and child sexual exploitation. The complaint alleges that the online gaming platform ignored internal safety warnings, misled parents, and failed to implement even the most basic protections for young users.

According to the lawsuit, Roblox Corporation knowingly allowed its platform to host and distribute sexually explicit content involving minors, creating what investigators described as a “distribution hub” for harmful material. The state argues that Roblox Corporation prioritized profits over child safety, leaving children vulnerable to predatory interactions.

That same month, a California mother filed suit against Roblox Corporation and Discord, alleging that a 10-year-old girl was sexually exploited by an adult posing as a peer. The predator contacted the child through in-game chats, sent nude photos, and manipulated her into sending images in return.

The lawsuit alleges that both companies failed to detect or stop the ongoing abuse despite their marketing claims of safe, moderated environments for children.

Also in August, authorities charged Matthew Macatuno Naval, 27, after he abducted a 10-year-old girl he met on Roblox and continued communicating with her through Discord. He obtained her address, drove across the state, and took her hundreds of miles away before law enforcement recovered her safely.

A related federal lawsuit names Roblox and Discord for failing to enforce safety features or restrict direct messaging between minors and adults.

A Georgia family filed a lawsuit on behalf of their 9-year-old child, who was contacted by multiple adult predators posing as children. The predators sent sexually inappropriate messages and coerced the victim into sending images. When the child tried to stop, they threatened to share the photos publicly.

The lawsuit alleges that Roblox’s moderation and parental controls were insufficient, allowing the abuse to continue unchecked.

In July, two separate Roblox sexual abuse lawsuits were filed after a 13-year-old girl was taken across state lines, and a 14-year-old Alabama girl was lured from her home. Both cases involved predators who first gained trust through Roblox and then moved conversations to Discord before attempting in-person meetings.

Authorities intervened before further harm occurred. The lawsuits cite Roblox’s failure to monitor direct messages and off-platform contact.

Federal agents arrested Christian Scribben in May 2025 for using Roblox and Discord to groom and exploit children as young as eight. Victims were instructed to create sexually explicit photos and videos, which were distributed through Discord servers.

Roblox faced lawsuits claiming the company failed to remove Scribben’s accounts despite repeated user reports and ignored early warning signs of systemic exploitation.

A family sued after a 13-year-old girl was groomed and sexually assaulted by a predator she met on Roblox. According to the lawsuit in Texas, the man used Discord to locate her home and record the assault.

Additional lawsuits filed in San Mateo County, California, and Georgia that month involved minors coerced into producing child sexual abuse material through Roblox’s private chat system. One of these cases involved a 16-year-old Indiana girl trafficked to Georgia and sexually assaulted after meeting her abuser through Roblox.

Two April 2025 lawsuits highlighted the same recurring pattern: predators initiating contact through Roblox private messages and moving victims to Discord for coercion.

One involved Sebastian Romero, accused of exploiting at least 25 minors, including a 13-year-old boy whom he manipulated into sharing explicit images. Plaintiffs allege that both companies failed to verify user ages or monitor unsafe environments, despite being aware of the known risks.

A nationwide investigation uncovered extensive child exploitation occurring across both Discord and Roblox. Among the most disturbing cases was Arnold Castillo, a Roblox developer accused of trafficking a 15-year-old Indiana girl across state lines. During her eight-day captivity, she was repeatedly sexually abused and referred to by Castillo as a “sex slave.”

The investigation also documented other incidents, including a registered Kansas sex offender contacting an 8-year-old through Roblox and an 11-year-old New Jersey girl abducted after being groomed on the platform.

A California class action lawsuit filed in 2022 named Roblox Corporation, Discord Inc., Snap Inc., and Meta Platforms Inc. as defendants for enabling the online exploitation of a teenage girl.

Plaintiffs claim that adult men used these interconnected online platforms to manipulate the minor into sharing explicit material and engaging in dangerous behavior while the companies failed to verify ages or restrict contact between adults and minors.

One of the earliest lawsuits against Roblox dates back to 2019, when a California mother discovered her son had been groomed by an adult user. The perpetrator persuaded the child to send sexually explicit images, highlighting early warnings about the Roblox platform’s vulnerability to abuse.

A multidistrict litigation (MDL) brings together lawsuits on a similar subject, in this case child abuse and online platform safety, so that common questions, pretrial discovery, and legal issues can be handled together.

Central Judge and Jurisdiction: All pretrial decisions will be made by Chief Judge Richard Seeborg in the Northern District of California. Each family’s case stays separate for potential trial, but evidence and expert witnesses will be shared across cases for efficiency.

Families’ lawsuits share these main claims:

Unsafe Platform Design: Roblox’s tools, like private messages, avatars, and poor age checks, help predators secretly target and communicate with children.

Neglecting Warnings: Roblox allegedly ignored repeated alarms about child safety from users, campaigners, whistleblower staff, and the media.

Failing Moderation: Roblox allegedly allowed “condo games,” unchecked sextortion, inappropriate chats, and widespread grooming to thrive.

False Safety Claims: Marketing that described Roblox as “safe for kids” was untrue and concealed real risks, misleading both children and parents about the dangers.

Off-Platform Harm Escalation: Predators met victims on Roblox before moving them onto less-regulated sites where the abuse deepened.

Ultimately, plaintiffs argue that Roblox violated state consumer protection laws, among others, by exposing children to predators.

The largest number of cases had already been filed in the U.S. District Court for the Northern District of California, which is now the designated venue for coordinated pretrial proceedings. However, if a case is not resolved through settlement and must proceed to a jury trial, it can be sent back to the district where it was originally filed for trial.

If your family hasn’t filed suit yet, it may not be too late. New cases can be added to the MDL where appropriate.

As one of the most widely used online gaming platforms, Roblox continues to face scrutiny for serious lapses in child safety. Dozens of Roblox sexual abuse and exploitation lawsuits claim that predators exploited weak safeguards, poor moderation, and misleading design choices that left young users vulnerable.

Families say the company failed to protect children from explicit images, unsafe in-game chats, and the spread of inappropriate content, leading to devastating emotional and psychological harm. Below are five major safety breakdowns cited in ongoing actions against Roblox.

Chat and messaging tools remain one of the most significant concerns on the Roblox platform. Instead of providing a fully moderated experience, Roblox allowed users—including adults—to exchange inappropriate messages, send explicit images, and share links to external platforms.

Many lawsuits filed against Roblox claim that predators used these in-game chats to build trust and move victims off-platform, exposing them to sexual abuse and grooming. Despite repeated warnings, Roblox allegedly failed to implement effective safety features to protect minors.

Roblox’s user-created content has long been central to its appeal, but it has also opened the door to exploitation. Numerous lawsuits and investigations allege that the company allowed games depicting sexual acts and other harmful content to remain live for months.

Plaintiffs argue these experiences encouraged minors to share explicit photos or interact with sexual predators under the guise of gameplay. Critics say that this failure to enforce moderation standards demonstrates a disregard for child safety and creates an unsafe environment for millions of vulnerable users.

One Roblox flaw facing mounting criticism is its reliance on self-reported birthdates with no meaningful identity checks. This loophole allows adults to impersonate children and engage with minors directly. In several Roblox sexual abuse lawsuits, predators exploited this gap to groom victims and solicit sexually explicit images.

Attorneys argue that basic safeguards, such as verified parental consent, facial age estimation, and tighter parental controls, could have prevented many of these tragedies. Plaintiffs continue to seek to hold Roblox accountable for failing to adopt proven tools that protect minors.

Another recurring issue in the lawsuits against the Roblox Corporation filed nationwide is the company’s sluggish and inconsistent content moderation. Reports indicate that inappropriate content, predatory accounts, and offensive games often remained accessible long after being reported.

Critics allege that Roblox prioritized user growth and profits over child safety, allowing unsafe material to spread unchecked. Families affected by these failures describe lasting emotional distress, saying Roblox’s delayed action made it easier for sexual predators to exploit children.

Many of the most serious grooming incidents began on Roblox but escalated through Discord or other messaging apps. The company’s social features and in-game prompts often encourage players to communicate beyond Roblox, where oversight is minimal.

Multiple lawsuits now claim that this lack of restriction enabled predators to contact and manipulate children privately. Plaintiffs argue that Roblox’s design choices directly contributed to the rise in child exploitation cases, creating real-world consequences and lifelong trauma for victims.

Families filing Roblox lawsuits allege that Roblox failed in its fundamental duty to protect children from foreseeable harm. These cases focus on how Roblox and Discord allowed child exploitation to occur on platforms marketed as safe spaces for minors.

Plaintiffs argue that corporate negligence, poor oversight, and misleading assurances enabled widespread abuse that caused severe psychological distress and long-term emotional harm.

Civil claims against these technology companies are grounded in both state and federal law.

A growing number of plaintiffs across the United States claim that Roblox Corporation and Discord Inc. ignored years of internal safety warnings about online predators and unsafe chat features. Each complaint alleges that the platforms prioritized profits and engagement over user welfare, leaving children sexually exploited.

Families pursuing these actions argue that Roblox’s design choices—such as open chat systems and unverified accounts—made exploitation predictable and preventable.

Many lawsuits assert that the gaming platform itself was defectively designed. By failing to implement stronger moderation tools, reporting systems, and remote account management options for parents, Roblox allegedly allowed predators to use its environment to exchange or share explicit images with minors.

Plaintiffs contend that these design failures turned what should have been a child-friendly experience into a global risk for grooming and trafficking.

Numerous lawsuits reference the Children’s Online Privacy Protection Act (COPPA) and the Trafficking Victims Protection Act (TVPA), two federal laws aimed at preventing child exploitation online.

Attorneys for victims claim that Roblox’s and Discord’s business models encouraged user growth without adequate screening, leading to grooming that violated these statutes. These claims seek to hold both corporations liable for ignoring known dangers within their ecosystems.

Parents say the companies misrepresented their platforms as “safe for kids” while allowing predators to operate freely. This deceptive marketing forms the basis of additional claims for consumer fraud and negligent misrepresentation.

Families argue that the companies knowingly concealed the risks of inappropriate content and unsafe interactions, persuading millions of parents to trust a system that failed to deliver on its safety promises.

Another common allegation is that Roblox failed to warn parents about the high risk of exploitation or to remove dangerous users once reports were filed. Each Roblox lawsuit filed under this theory argues that the company had access to moderation data and chat logs showing predatory patterns but refused to act.

This inaction, families claim, directly contributed to the abuse and compounded their children’s emotional distress.

Defense attorneys often cite Section 230 of the Communications Decency Act, which shields online companies from liability for user-generated content. However, plaintiffs maintain that Roblox went far beyond passive hosting by designing interactive features that enabled and monetized unsafe behavior.

Our attorneys believe that this conduct strips the companies of Section 230 immunity, allowing families to pursue meaningful accountability through civil sexual assault lawsuits.

A multidistrict litigation (MDL) brings together lawsuits that raise similar issues, in this case child sexual abuse and online platform safety, so that common questions, pretrial discovery, and legal issues can be handled together. All pretrial decisions in the Roblox MDL will be made by Chief Judge Richard Seeborg of the U.S. District Court for the Northern District of California. Each family's case remains separate for purposes of trial, but evidence and expert witnesses will be shared across cases for efficiency.

Families’ lawsuits share these main claims:

Unsafe Platform Design: Roblox’s features, such as private messaging and avatars, combined with weak age verification, allegedly help predators secretly target and communicate with children.

Neglecting Warnings: Roblox allegedly ignored repeated alarms about child safety from users, campaigners, whistleblower staff, and the media.

Failing Moderation: Roblox allegedly allowed “condo games,” unchecked sextortion, inappropriate chats, and widespread grooming to thrive.

False Safety Claims: Marketing that described Roblox as “safe for kids” was allegedly untrue and concealed real risks, misleading both children and parents about the dangers.

Off-Platform Harm Escalation: Predators met victims on Roblox before moving them onto less-regulated sites where the abuse deepened.

Plaintiffs also argue that Roblox violated state consumer protection laws, among others, by exposing children to predators.

The largest number of cases had already been filed in the U.S. District Court for the Northern District of California, which now serves as the venue for coordinated pretrial proceedings. If a case is not resolved through settlement and must proceed to a jury trial, it can be sent back to the court where it was originally filed or to the plaintiff’s home district for trial.

If your family hasn’t filed suit yet, it may not be too late. New cases can be added to the MDL when appropriate.

As one of the most widely used online gaming platforms, Roblox continues to face scrutiny for serious lapses in child safety. Dozens of Roblox sexual abuse and exploitation lawsuits claim that predators exploited weak safeguards, poor moderation, and misleading design choices that left young users vulnerable.

Families say the company failed to protect children from explicit images, unsafe in-game chats, and the spread of inappropriate content, leading to devastating emotional and psychological harm. Below are five major safety breakdowns cited in ongoing actions against Roblox.

Chat and messaging tools remain one of the most significant concerns on the Roblox platform. Instead of providing a fully moderated experience, Roblox allowed users—including adults—to exchange inappropriate messages, send explicit images, and share links to external platforms.

Many lawsuits filed against Roblox claim that predators used these in-game chats to build trust and move victims off-platform, exposing them to grooming and sexual abuse. Despite repeated warnings, Roblox allegedly failed to implement effective safety features to protect minors.

Roblox’s user-created content has long been central to its appeal, but it has also opened the door to exploitation. Numerous lawsuits and investigations allege that the company allowed games depicting sexual acts and other inappropriate or harmful content to remain live for months.

Plaintiffs argue these experiences encouraged minors to share explicit photos or interact with sexual predators under the guise of gameplay. Critics say that this failure to enforce moderation standards demonstrates a disregard for child safety and creates an unsafe environment for millions of vulnerable users.

One Roblox flaw facing mounting criticism is its reliance on self-reported birthdates with no meaningful identity checks. This loophole allows adults to pose as children and engage with minors directly. In several Roblox sexual abuse lawsuits, predators allegedly exploited this gap to groom victims and solicit sexually explicit images.

Attorneys argue that basic safeguards, such as verified parental consent, facial age estimation, and tighter parental controls, could have prevented many of these tragedies. Plaintiffs seek to hold Roblox accountable for failing to adopt proven tools that protect minors.

Another recurring issue in the lawsuits filed nationwide against Roblox Corporation is the company’s slow and inconsistent content moderation. Reports indicate that inappropriate content, predatory accounts, and offensive games often remained accessible long after being reported.

Critics allege that Roblox prioritized user growth and profits over child safety, allowing unsafe material to spread unchecked. Families affected by these failures describe lasting emotional distress, saying Roblox’s delayed action made it easier for sexual predators to exploit children.

Many of the most serious grooming incidents began on Roblox but escalated through Discord or other messaging apps. The company’s social features and in-game prompts often encourage players to communicate beyond Roblox, where oversight is minimal.

Multiple lawsuits now claim that this lack of restriction enabled predators to contact and manipulate children privately. Plaintiffs argue that Roblox’s design choices directly contributed to the rise in child exploitation cases, creating real-world consequences and lifelong trauma for victims.

Families filing Roblox lawsuits allege that the company failed in its fundamental duty to protect children from foreseeable harm. These cases focus on how Roblox and Discord allowed child exploitation to occur on platforms marketed as safe spaces for minors.

Plaintiffs argue that corporate negligence, poor oversight, and misleading assurances enabled widespread abuse that caused severe psychological distress and long-term emotional harm.

Civil claims against these technology companies are grounded in both state and federal law.

All content undergoes thorough legal review by experienced attorneys, including Jonathan Rosenfeld. With 25 years of experience in personal injury law and over 100 years of combined legal expertise within our team, we ensure that every article is legally accurate, compliant, and reflects current legal standards.